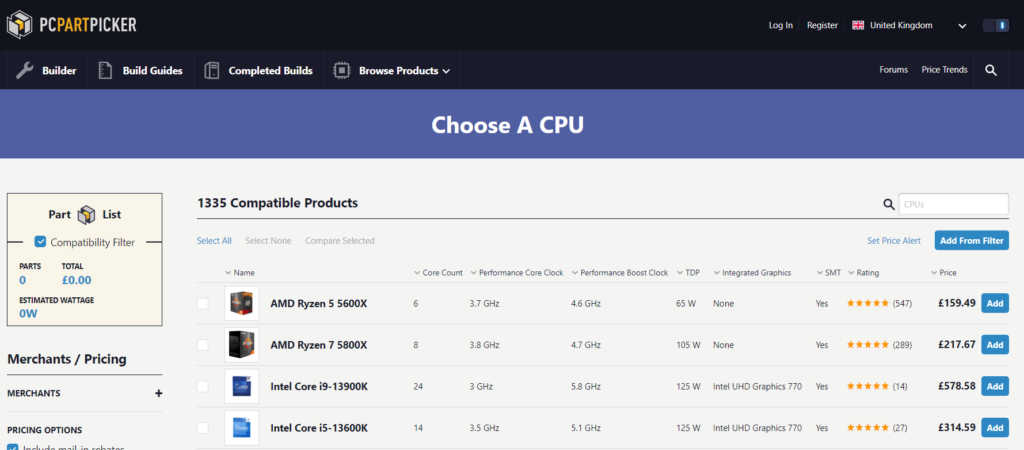

A quick browse of any retailer that sells computer components will show that there are hundreds of different CPUs available. A CPU is not just a standard component with a fixed set of features: each model is tailored to the needs of a different type of user.

CPU manufacturers such as Intel, AMD and Apple balance the mix of features in each CPU model they produce to hit a particular price, level of performance or power consumption, depending on where and how it will be used. For example, a CPU designed for a desktop machine will be tailored for speed and performance, whereas a CPU designed for a tablet will be optimised for minimal power consumption and therefore will not be as fast or capable as a desktop CPU.

There are three key CPU characteristics which determine how capable each processor will be. These are:

- Clock speed

- Number of cores

- Amount of cache memory

Before jumping straight into these, it would be sensible to understand what we mean by “speed” and “performance” when referring to a CPU.


Measuring CPU Performance

When we talk about how fast a CPU is or how well it performs, what does that actually mean?

Performance is a broad term used to describe how quickly a computer can carry out common tasks such as office work, web browsing, video editing, content creation and playing games. How quickly your computer can carry out these tasks actually depends on a number of components, usually your storage device, CPU, graphics card and amount of available memory (RAM).

Concentrating solely on the CPU, performance refers to two main factors:

- How quickly can the CPU carry out program instructions?

- How many program instructions can the CPU carry out at the same time? We call this “parallel processing.”

Comparing CPU performance used to be quite simple, but modern processors are complex, dynamic devices that can adapt to the demands placed on them. For example, a modern CPU can change the speed it runs at, or switch parts of itself off to save power when they are not in use.

There are also limiting factors outside the CPU that affect how fast it can work. You may have the fastest CPU in the world, but if your system does not have enough RAM to hold the data you are currently working on, you will see little or no advantage over a slower CPU.

To simplify things for GCSE, when we talk about the performance or speed of a CPU, we mean only how quickly it can carry out program instructions compared with another CPU.

Clock Speed

In the previous section, we learned that the CPU carries out a Fetch – Decode – Execute cycle repeatedly from power on to power off. You will also have learned that this cycle is controlled by a regular clock pulse, or signal, in the system which dictates when each part of the cycle happens.

Clock speed is the measurement of how often this clock pulse happens per second. This has a direct impact on how many FDE cycles may take place per second. Again, as a simplification for GCSE, we use the following relationship:

1 clock pulse = 1 FDE cycle

In computing we measure the rate, or “frequency”, of clock pulses in hertz (Hz). When referring to the clock speed, it is normal to say “the clock frequency is…” or even just “the frequency of a CPU is…”

1 hertz (1 Hz) would mean 1 single cycle was carried out each second. 10 Hz would be 10 cycles per second, and so forth. If a CPU really did run at 1 Hz, you’d have to wait several years for your computer to turn on, let alone do anything useful. Luckily for us, CPUs use much, much higher clock frequencies.

CPU speeds (frequencies) have increased at absurd rates over time. This trend is often linked to “Moore’s Law”, named after a beardy bloke called Gordon Moore, a co-founder of Intel, who predicted that the number of transistors (switches) inside a chip would double roughly every two years. This usually meant that performance doubled at roughly that rate too, and for many, many years his law held true (though not any more in terms of clock frequencies).

In 1980 you’d have measured your CPU’s speed in megahertz (MHz), which sounds awesome and, to be fair, it was at the time. 1 MHz = 1 million cycles per second, which is quite something, right?

However, computing moves on rapidly and you’d be fairly depressed today if even your watch ran at 1 MHz.

Around the end of 1999, CPU speeds approached the magical 1 gigahertz (GHz) mark. 1 GHz = 1,000 MHz, which is equal to 1 billion cycles per second. Now we’re talking. That was a big moment: I remember reading about the first 1 GHz computer and feeling like it was some kind of amazing milestone. In reality it used so much power you’d get a phone call from the national grid every time you turned it on, and it ran hot enough that you no longer needed central heating. Great days.

Common measurements of CPU clock frequencies, then, are:

| Frequency | Symbol | Name |
|---|---|---|
| 10⁶ Hz (a million hertz): the 1980s to 1999 | MHz | megahertz |
| 10⁹ Hz (a billion hertz): all modern CPUs are in this range | GHz | gigahertz |
| 10¹² Hz (a trillion hertz): not going to happen in my lifetime | THz | terahertz |

We are now up to about 5 GHz in a desktop CPU, which is crazy, especially when you realise that modern processors aren’t just 1 CPU at all: they’re 4, 6, 8 or even more CPUs rolled into one, each capable of running at these high clock speeds.

What does this mean for performance? As we increase the clock speed (frequency) of a CPU, we increase the rate at which it can process a program’s instructions. In other words, things happen faster and we get more done in the same amount of time than a slower processor would, and that’s only a good thing, right?

Summary:

- Frequency, or “clock speed”, is measured in hertz (Hz)

- Commonly we use megahertz (MHz, “mega” meaning million) or gigahertz (GHz, “giga” meaning billion)

- 1 Hz = 1 cycle per second

- 1 MHz = 1 million cycles per second

- 1 GHz = 1 billion cycles per second

- The more cycles per second, the more instructions we execute per second, and the faster our computer appears to run.
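To make the arithmetic in the summary concrete, here is a minimal Python sketch. The names `PREFIXES`, `cycles_per_second` and `seconds_per_cycle` are our own, and it assumes the GCSE simplification used above that 1 clock pulse = 1 FDE cycle.

```python
# Multipliers for the frequency units used above (standard SI prefixes)
PREFIXES = {"Hz": 1, "MHz": 10**6, "GHz": 10**9, "THz": 10**12}


def cycles_per_second(value: float, unit: str) -> float:
    """Convert a clock frequency such as 3.5 GHz into cycles per second."""
    return value * PREFIXES[unit]


def seconds_per_cycle(value: float, unit: str) -> float:
    """How long one clock pulse lasts: the period is 1 / frequency."""
    return 1 / cycles_per_second(value, unit)


# GCSE simplification (assumed): 1 clock pulse = 1 FDE cycle = 1 instruction
speed = cycles_per_second(3.5, "GHz")
print(f"A 3.5 GHz CPU: {speed:,.0f} FDE cycles per second")
print(f"Each cycle lasts about {seconds_per_cycle(3.5, 'GHz') * 1e9:.2f} nanoseconds")
```

Each tick of a 3.5 GHz clock lasts well under a nanosecond, which is why even small delays elsewhere in the system add up to a lot of wasted cycles.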

There are one or two down sides here:

- As clock frequency increases, the CPU’s circuitry generates more heat. This can be reduced to an extent by making the circuitry even smaller, which also uses less power, but only up to a point. Shrinking circuitry is difficult and obviously finite: you can only go so small before you are literally pushing single atoms around very thin bits of wire.

- When a program is running it will frequently pause for some reason, for example, waiting for the user to type something on the keyboard. During times like these, it wouldn’t matter if you had a 100 GHz processor: it’s going to sit there doing nothing while it waits for your input! So you don’t always get a massive increase in performance from higher clock speeds, because the CPU has to waste time whilst it waits for other things to happen.
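The second downside can be shown with a simple timing model. This is a rough sketch rather than how real CPUs are benchmarked: it assumes one instruction per clock cycle and a fixed, made-up waiting time that no amount of clock speed can shorten. The function name `task_time_seconds` is hypothetical.

```python
def task_time_seconds(instructions: int, clock_hz: float, waiting_seconds: float) -> float:
    """Simplified model: time spent executing instructions plus time spent waiting.

    Assumes 1 instruction per clock cycle; waiting time (e.g. for the user
    to type) is unaffected by the clock speed.
    """
    executing = instructions / clock_hz
    return executing + waiting_seconds


# Hypothetical task: 2 billion instructions plus 3 seconds waiting for input
slow = task_time_seconds(2_000_000_000, 1e9, 3.0)  # 1 GHz CPU
fast = task_time_seconds(2_000_000_000, 2e9, 3.0)  # 2 GHz CPU
print(f"Doubling the clock speed: {slow} s -> {fast} s ({slow / fast:.2f}x faster, not 2x)")
```

Only the executing part shrinks when the clock speed doubles; the 3 seconds of waiting stays exactly the same, so the overall gain is much smaller than the clock figures suggest.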

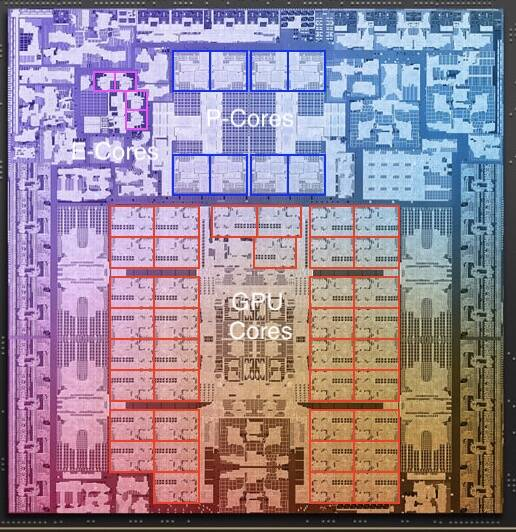

Number of Cores

Dual-core, quad-core and octo-core are terms you may well have heard before, often used in adverts for computers, but what do they actually mean?

Initially, processor manufacturers (Intel and AMD) attempted to improve processor performance by focussing on increasing clock speed. This makes sense – the whole purpose of the processor is to carry out instructions. If you can increase the speed at which this happens then you should get better and better system performance. This is true, but only to a point.

Around 2004/5 manufacturers began to hit some problems with this approach to processor design:

- Increasing clock speed usually increases the heat produced by a chip. To counter this, you have to make circuitry physically smaller. You can only go so small before you hit physical limitations.

- No matter how fast you make the clock speed, there will always be delays in processing as the CPU waits for human input, reading or writing of data or similar. Increasing clock speed does not solve this problem.

- Users were demanding computers that could do more and more things at the same time; this is called multitasking. A single CPU can only do one task at a time, so a solution to this problem was needed.

The idea of putting more than one CPU in a system had existed for years in servers. Servers are large, powerful computers designed to cope with many users demanding resources and running programs at the same time. Consequently, servers needed to be “multi-processor” computers, meaning they physically have multiple CPUs installed in the same machine. The benefit of this is that tasks can be split amongst those CPUs: they can be doing different things at the same time, which gives us true multitasking.

The downsides of multi-processor systems were that they were complex to design, needed software that knew how to split tasks amongst the chips effectively, and were massively expensive. The idea, though, was a great one.

AMD and Intel began to design chips which incorporated this multiple processor idea into a single CPU. The result was the “Multi Core CPU” meaning a single physical chip which contains multiple processing units.

We now have to update our definitions and understandings a little:

- CPU – A chip responsible for the processing of program instructions. May contain one or more cores.

- Core – A single processing unit inside a CPU. Each core can carry out its own fetch–decode–execute cycle independently.

This means a dual-core processor is literally 2 CPUs in one: it has two processing units inside it. A quad-core has 4, and so on.
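If you are curious how many processing units your own machine reports, Python’s standard library can tell you. Be aware that `os.cpu_count()` returns the number of *logical* CPUs the operating system sees, which on processors that run two threads per core can be double the number of physical cores, and it returns `None` if the count cannot be determined.

```python
import os

# Number of logical CPUs visible to the operating system (None if unknown).
# This can be higher than the number of physical cores in the chip.
logical_cpus = os.cpu_count()
if logical_cpus is None:
    print("The number of CPUs could not be determined")
else:
    print(f"This machine reports {logical_cpus} logical CPUs")
```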

There are some awesome advantages here:

- A single core can only carry out one task at a time. Therefore, more cores mean you can carry out more tasks in parallel (at the same time).

- The more cores you have, the more you can split tasks amongst those cores to make them run faster (but not all tasks can be split in this way)

- The more cores in a machine, the better it will be able to “multitask” meaning system performance is better when running many programs at the same time.

However this brought with it some complications:

- 2 cores does NOT mean twice as fast! 4 cores does not mean four times as fast! Do not put this in an exam, it’s not true!

- Programs and operating systems have had to be adapted so they can share tasks amongst cores and make use of the extra processing cores available to them. This is not a simple task.

- Not all tasks can be split in this way; some can only ever run on one core at a time.
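The first complication can be put into numbers using Amdahl’s law, a standard formula for the speed-up you get when only part of a job can be split across cores. The sketch below assumes a hypothetical program where 80% of the work can run in parallel; the other 20% must still run on one core however many cores you add. The function name `amdahl_speedup` is our own.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: overall speed-up when only part of a task can be split.

    parallel_fraction: share of the work (0.0 to 1.0) that can run in parallel.
    The remaining (1 - parallel_fraction) still runs on a single core.
    """
    serial_fraction = 1 - parallel_fraction
    return 1 / (serial_fraction + parallel_fraction / cores)


# Assume (hypothetically) that 80% of a program can be split across cores
for cores in (1, 2, 4, 8):
    print(f"{cores} cores: {amdahl_speedup(0.8, cores):.2f}x faster")
```

With these assumed figures, 2 cores give roughly a 1.67x speed-up and 4 cores give 2.5x, not 2x and 4x, because the part that cannot be split never gets any quicker.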

About that disadvantage… Why can’t all tasks be split?

Let’s sidetrack for an analogy.

Imagine I’m painting my room a lovely fetching shade of cat vomit green. My room is simple and boring and has 4 walls of equal size and shape. Let’s pretend each wall takes me 1 hour to paint.

If I paint the room myself it will clearly take 4 walls x 1 hour for each wall = 4 hours.

But does it matter what order I paint the walls in? Of course not. Painting one wall has nothing to do with painting any of the others. So I can paint the room much more quickly if I invite some friends round and bribe them with beer and food to help me paint it.

If I increase the number of people painting to 4 then clearly… 4 people working for 1 hour will get the job done. I’ve reduced the time taken from 4 hours down to 1 by working in parallel. Winner. And I lied about the beer. My friends hate me.

Painting a room is a perfect task that can be split up and worked on separately, and as a consequence the work gets done much, much quicker. This is the same in computing: if a task can be split like this, then we can get huge performance gains by splitting it over many cores (this is what graphics processors do really well, and they have literally thousands of cores).
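The painting analogy can be written as a small calculation. This is a simplified sketch (the function name `parallel_job_hours` is ours) that assumes every wall takes the same time and each painter just picks up the next unpainted wall.

```python
import math


def parallel_job_hours(jobs: int, workers: int, hours_per_job: float) -> float:
    """Time to finish independent jobs when they are shared between workers.

    No job depends on another, so each worker simply takes the next free job.
    The busiest worker ends up doing ceil(jobs / workers) of them.
    """
    return math.ceil(jobs / workers) * hours_per_job


for painters in (1, 2, 3, 4, 8):
    print(f"{painters} painter(s): {parallel_job_hours(4, painters, 1.0)} hours")
```

Notice that 3 painters finish no sooner than 2 (someone still has a fourth wall to do), and more than 4 painters gain nothing, because there are only 4 walls to share out. Extra cores behave the same way when a task cannot be split any further.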

But let’s take another example: washing my car.

I invite the same 3 friends round again (they’ve forgiven me, so it’s OK).

This time I want them to help me wash my car (not wise, it’s so rusty it’s probably soluble).

I give each friend a job: one has the hose to rinse it down, one has a bucket and sponge, one has a bottle of car wax and the other has a nice chamois (what an incredible word) leather to dry it off and make it shiny.

The jobs have to be carried out in order:

- Rinse

- Wash with sponge

- Wax

- Dry and Shine.

There is no way my friends can actually help reduce the time this job takes. You cannot start waxing the car before it’s been washed, and you can’t dry it before it’s been cleaned. Therefore my 3 friends just stand around bored, drinking beer (I had to provide it this time; they’d never forgive me if I lied a second time). There are some clever ways we could work more efficiently on this job, but we cannot do these tasks in parallel.

This is a perfect example of a job in computing that could not be improved by having more and more cores – some jobs simply cannot be split up to be made to work faster. In these scenarios, you simply want the highest clock speed you can get.
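In code, a job like the car wash looks like a chain of steps where each one needs the result of the one before. The sketch below uses made-up step functions and hypothetical durations in minutes; the point is that the total time is the sum of every step, whatever the number of helpers (or cores).

```python
# Each step takes the car in the state left by the previous step,
# so a step cannot start until the one before it has finished.
def rinse(car: dict) -> dict:
    return {**car, "rinsed": True}


def wash(car: dict) -> dict:
    if not car.get("rinsed"):
        raise ValueError("cannot wash before rinsing")
    return {**car, "washed": True}


def wax(car: dict) -> dict:
    if not car.get("washed"):
        raise ValueError("cannot wax before washing")
    return {**car, "waxed": True}


def dry_and_shine(car: dict) -> dict:
    if not car.get("waxed"):
        raise ValueError("cannot shine before waxing")
    return {**car, "shiny": True}


# Hypothetical durations (minutes) for each step, in the order they must happen
STEPS = [(rinse, 10), (wash, 20), (wax, 15), (dry_and_shine, 15)]


def wash_car(car: dict):
    """Run every step in order and add up the time taken."""
    total_minutes = 0
    for step, minutes in STEPS:
        car = step(car)  # the next step needs this result
        total_minutes += minutes
    return car, total_minutes


result, minutes = wash_car({"name": "rusty hatchback"})
print(result, minutes)
```

Giving this job more workers changes nothing: step 2 still has to wait for step 1, so the total stays at the sum of all the steps. For work like this, a faster single core (higher clock speed) is what helps.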

Cache

Cache is a small amount of memory inside the CPU, used to hold frequently accessed instructions and data. It runs at virtually the same speed as the CPU.

Many years ago, in the greasy mists of computing history, it became apparent that CPU speeds were far outpacing the speed of RAM. This is a problem because the CPU has no choice but to wait whilst RAM fetches or stores the data it needs. As you know by now, in computing, waiting is a bad thing in terms of performance.

Imagine a CPU is a really, really hungry and incredibly angry dictator. The CPU wants feeding, it wants instructions fed to it constantly and quickly. This enviable job belongs to RAM. If RAM can’t supply instructions fast enough then the hungry CPU becomes idle and when it gets idle it gets angry and no one likes an angry, idle CPU.

Being serious for a moment, this is a real problem. If RAM cannot send instructions quickly enough to the CPU then the CPU will sit idle, meaning it is doing absolutely nothing at all.

If RAM only works at half the speed of the CPU, then the CPU could be doing nothing for up to 50% of the time. Why is that a problem? Well, it effectively turns your 1 GHz processor into a 500 MHz processor: half the speed.
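The 50% example is simple multiplication. This sketch assumes one instruction per clock cycle and treats any time spent waiting for RAM as completely wasted, which is a simplification; `effective_clock_mhz` is a hypothetical name.

```python
def effective_clock_mhz(clock_mhz: float, busy_fraction: float) -> float:
    """Useful work rate when the CPU is only busy for part of the time.

    busy_fraction: share of time (0.0 to 1.0) spent executing rather than
    waiting for RAM. Simplified model: 1 instruction per clock cycle.
    """
    return clock_mhz * busy_fraction


# A 1 GHz (1000 MHz) CPU that sits idle half the time waiting for RAM
print(effective_clock_mhz(1000, 0.5))
```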

Cache solves this problem.

Remember our definition of cache: it is a small area of memory inside the CPU which is used to hold instructions and data. It is special because it runs at near CPU speed, meaning it can supply the CPU with an almost constant stream of data and instructions. This prevents delays or pauses whilst data is fetched from main memory (RAM).

Cache provides some other bonuses in terms of CPU performance. Programs often execute the same instructions repeatedly (in a loop, for example), so if we keep them in cache, the CPU can access these instructions and data at full speed every time. We do not have to repeatedly fetch the same instructions from RAM and waste time waiting for that to happen. This is why cache is used to hold instructions and data that are either frequently used or about to be needed.
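To see why keeping loop instructions in cache helps, here is a toy simulation in Python. It is not how a real CPU cache is built (real caches are hardware that store fixed-size blocks of memory); the `TinyCache` class is hypothetical and assumes a least-recently-used (LRU) policy for deciding what to throw out when the cache is full.

```python
from collections import OrderedDict


class TinyCache:
    """Toy model of a cache sitting between the CPU and RAM.

    Keeps copies of up to `capacity` recently used addresses. When full, it
    evicts the least recently used (LRU) entry (an assumed policy).
    """

    def __init__(self, capacity: int, ram: list):
        self.capacity = capacity
        self.ram = ram               # the slow main memory being cached
        self.lines = OrderedDict()   # address -> value, oldest first
        self.hits = 0
        self.ram_accesses = 0

    def read(self, address: int) -> int:
        if address in self.lines:
            # Hit: served straight from the fast cache
            self.hits += 1
            self.lines.move_to_end(address)  # now the most recently used
            return self.lines[address]
        # Miss: wait for RAM, then keep a copy in cache for next time
        self.ram_accesses += 1
        value = self.ram[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used
        return value


ram = list(range(100))          # pretend RAM: the value stored at each address
loop_body = [10, 11, 12, 13]    # addresses of the instructions inside a loop
cache = TinyCache(capacity=8, ram=ram)
for _ in range(1000):           # run the loop 1000 times
    for address in loop_body:
        cache.read(address)

print(f"RAM accesses: {cache.ram_accesses}, cache hits: {cache.hits}")
```

A loop of 4 instructions run 1,000 times needs 4,000 reads. With the cache, only the first 4 go to RAM and the remaining 3,996 are cache hits.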

Imagine cache acting as a funnel: we can fill it up with lots of instructions and data and it will feed them into the CPU when it needs them. All we need to do is keep it topped up so it doesn’t run out. This is done using very clever circuitry and algorithms which we don’t go into at GCSE, but as an overview, the CPU predicts what the program is likely to need next, fetches it from RAM before it is asked for, and places it in cache ready. This prediction sometimes fails and isn’t perfect, but the overall performance gain is significant.

Advantages of Cache:

- Runs at CPU speed

- Keeps frequently accessed instructions and data so the CPU can work on them quickly

- Reduces the amount of time a CPU is waiting for instructions to be loaded from memory

- Reduces the frequency (number of times) the CPU has to access RAM

Disadvantages of Cache:

- Cache costs a LOT of money to make compared to RAM (this is why RAM isn’t as fast as your CPU!)

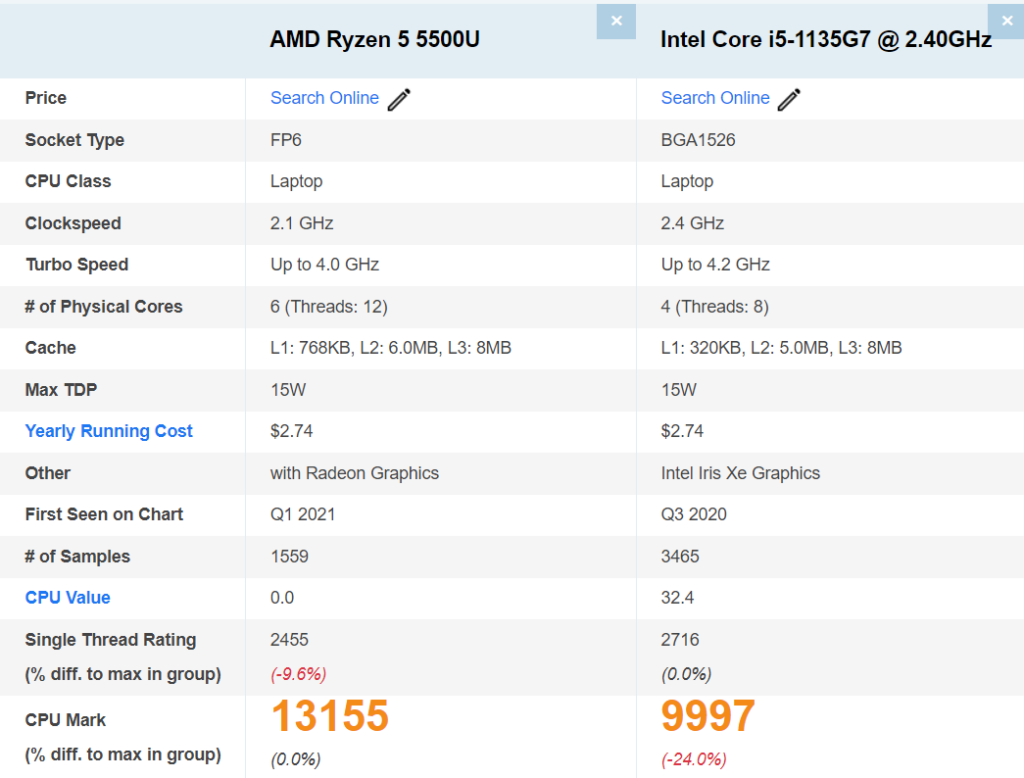

- Cache is limited, usually around 8mb (it takes up a lot of physical space inside the CPU, again a cost issue)

- If the instruction we want is NOT in cache then we have to wait to access RAM again

So, the bottom line is, cache improves performance because we are not waiting for instructions to be read from memory. The more cache we have, the less often we will need to access RAM.
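That bottom line can be expressed with the standard “average memory access time” formula: the time to check cache, plus the fraction of reads that miss multiplied by the extra time to reach RAM. The 1 ns and 100 ns figures below are illustrative assumptions, not measurements of any real CPU, and `average_access_ns` is a hypothetical name.

```python
def average_access_ns(hit_rate: float, cache_ns: float, ram_ns: float) -> float:
    """Average time per memory read in a simple one-level cache model.

    Every read checks cache first; misses then pay the extra cost of RAM.
    Average memory access time = hit_time + miss_rate * miss_penalty.
    """
    miss_rate = 1 - hit_rate
    return cache_ns + miss_rate * ram_ns


# Assumed timings: 1 ns to read cache, 100 ns extra to go out to RAM
for hit_rate in (0.0, 0.5, 0.9, 0.99):
    print(f"hit rate {hit_rate:.0%}: {average_access_ns(hit_rate, 1, 100):.1f} ns per read")
```

A bigger cache generally raises the hit rate, and the output shows how sharply the average time per read falls as the hit rate climbs.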